System Design / Leadership & Influence

Research Automation with AI

Research at Grab had become too slow, too expensive, and too inconsistent to support evidence-based product decisions. I designed an AI-powered insight workflow that reduced research time from months to minutes, raised insight quality, and freed designers to focus on actual design work.

Problem

Designers were doing research work they weren’t trained or scoped for

Most designers could no longer run foundational research.

Those who tried spent their limited bandwidth on:

planning

note-taking

synthesis

report writing

This work sat outside their role, slowed delivery, and produced inconsistent insight quality.

Vendor research was slow and unaffordable

Most teams avoided research entirely because vendor studies cost USD 40k–60k each and took 4–6 months to complete.

The company expected insight quality the org could no longer support

Product and design teams were forced to ship features with partial insights or none at all.

This created risk, rework, and low confidence in product decisions.

Outcome

Validated, end-to-end workflow

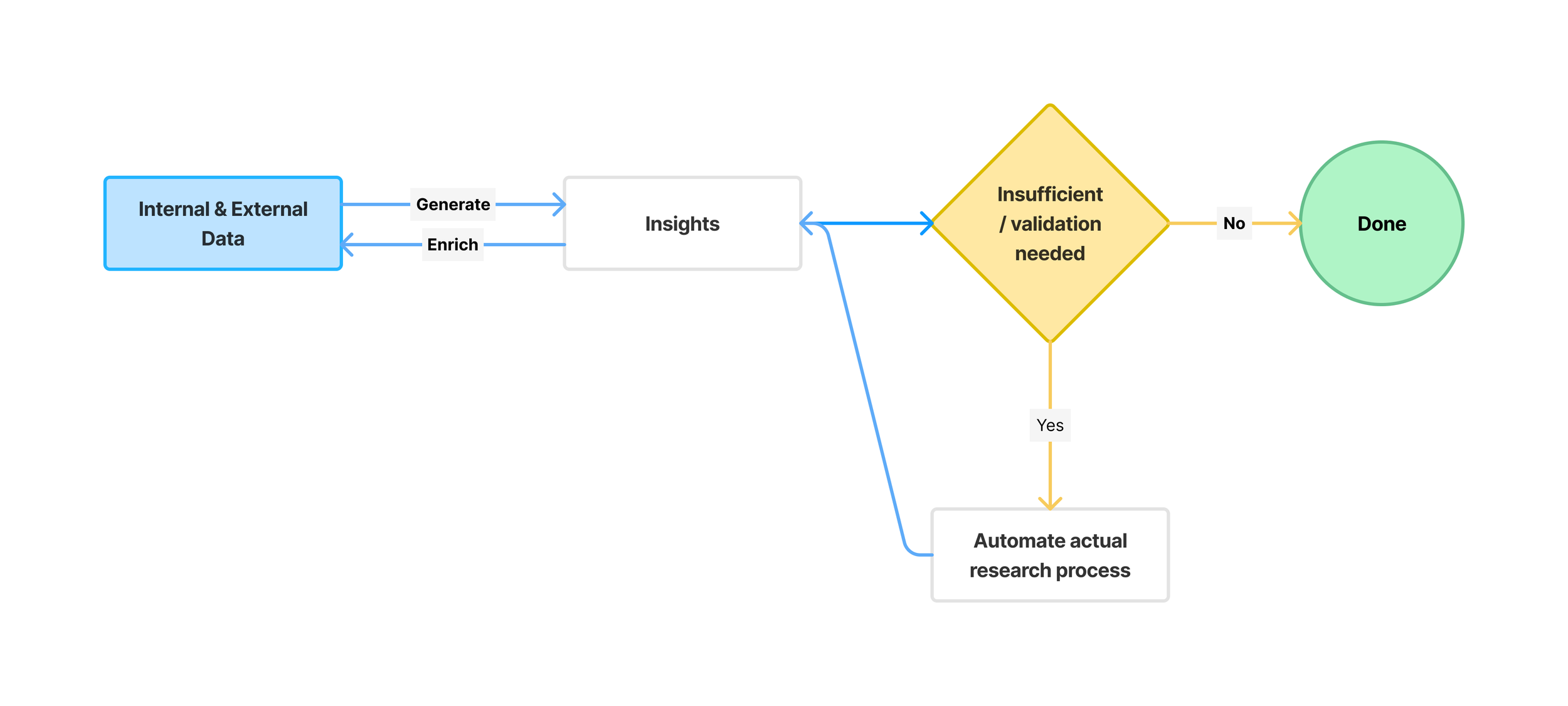

I created a validated, end-to-end workflow that:

automates research planning

generates first-pass insights

synthesizes across internal + external sources

produces structured recommendations

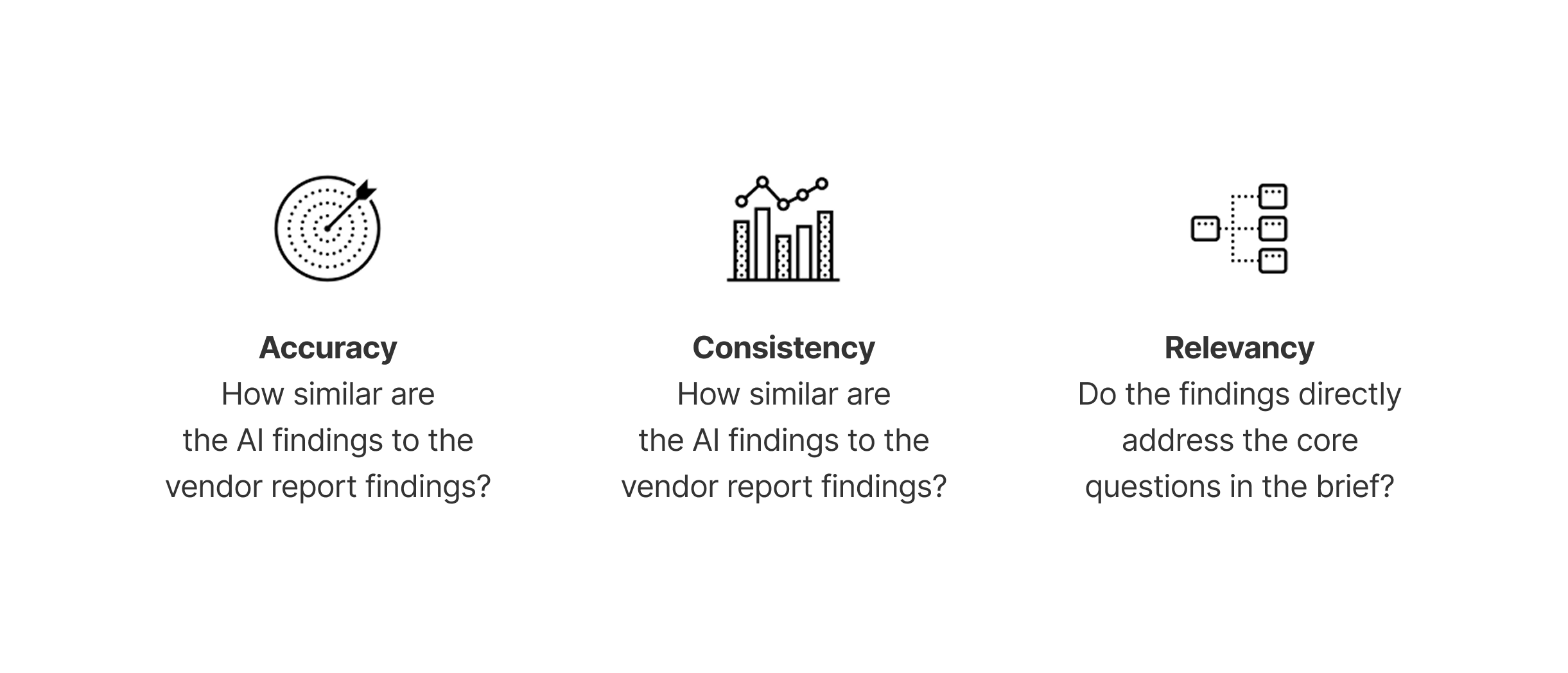

uses a clear validation model for accuracy, consistency, and relevance

It’s now available on the Grab Design internal site, used across teams, and adopted as the reference workflow for AI-assisted research.

My Role

Done with minimal engineering support

Defined the new research workflow from scratch

Evaluated multiple AI engines + selected the safest high-performer

Designed the prompt architecture

Built the insight validation model (accuracy, consistency, relevance)

Documented the full system for the design org

Ran pilot sessions with multiple squads

Guided adoption across teams and design leadership circles

Process

Problem Framing

The sprint direction collapsed after leadership shifted the mandate.

I reframed the problem around the real operational constraints:

no researchers

designers overloaded

vendor research too slow/expensive

leadership demanding insight at scale

I mapped the opportunity space and defined:

“Fast, reliable insights without specialists or vendor dependencies.”

Exploration & Testing

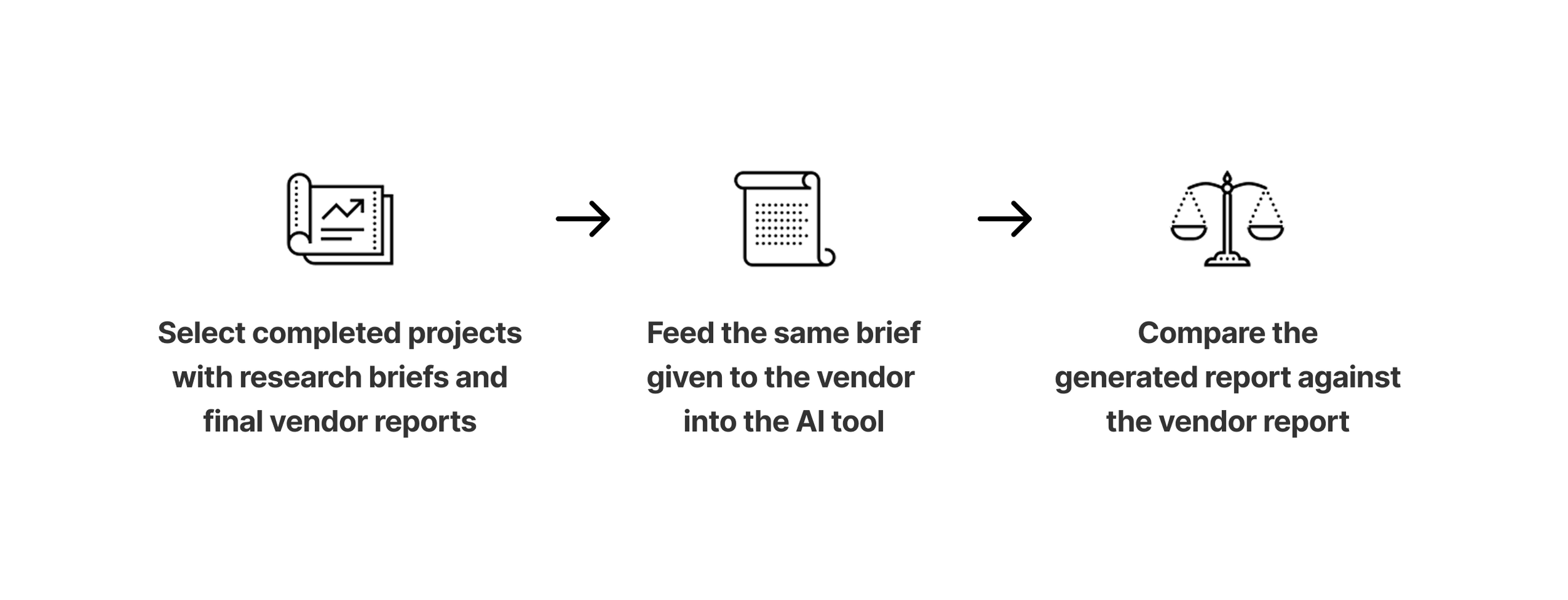

I benchmarked multiple AI engines and tested them directly against vendor reports.

What I evaluated

Gemini Deep Research

DeepSeek DeepThink (discarded for compliance reasons)

GPT Deep Research

Grok Deeper Research

Internal approved engines

How I evaluated

Accuracy: similarity of AI outputs vs vendor findings

Consistency: repeatability across 5 runs

Relevance: alignment to the brief and core questions
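The three criteria above can be sketched as simple scoring functions. This is a minimal illustration, not the scoring actually used in the evaluation: the source does not say how similarity was measured, so token overlap (Jaccard) stands in for it here, and every function name, the `threshold` parameter, and the sample strings in the usage note are hypothetical.

```python
from itertools import combinations
import re


def tokens(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for real semantic comparison."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Token overlap between two texts: 0.0 (disjoint) to 1.0 (same word set)."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)


def accuracy(ai_findings: list[str], vendor_findings: list[str]) -> float:
    """Average, over vendor findings, of the best-matching AI finding."""
    if not ai_findings or not vendor_findings:
        return 0.0
    best = [max(jaccard(v, a) for a in ai_findings) for v in vendor_findings]
    return sum(best) / len(best)


def consistency(runs: list[str]) -> float:
    """Mean pairwise similarity across repeated runs of the same brief."""
    pairs = list(combinations(runs, 2))
    if not pairs:
        return 1.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


def relevance(output: str, core_questions: list[str], threshold: float = 0.5) -> float:
    """Share of core questions whose terms mostly appear in the output."""
    if not core_questions:
        return 0.0
    out = tokens(output)
    covered = 0
    for question in core_questions:
        q = tokens(question)
        if q and len(q & out) / len(q) >= threshold:
            covered += 1
    return covered / len(core_questions)
```

For example, `consistency(runs)` with the five outputs of a repeated brief returns one number between 0 and 1 that can be compared across engines. In practice a human reviewer or an embedding-based comparison would likely replace the token overlap.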

A few key breakthroughs:

AI accuracy only improves when the research brief is high-quality → I built a prompt to generate better briefs.

Not every research type is suitable → I defined guardrails for human vs AI boundaries.

The fastest path was not building custom tools → use the internal AI platform as the MVP.

This testing became the foundation of the final workflow.

System Direction

After the sprint, leadership pivoted the mandate toward a zero-engineering solution. I merged the original vision (tooling + actual research automation) with the new constraint (AI deep research) into a unified roadmap: AI deep research first, with actual research automation as the long-term direction.

MVP Deployment

I deployed a practical, production-ready MVP:

Inputs:

Raw research brief

Context + known data

Planned scope of inquiry

AI workflow:

Generate improved research brief

Run first-pass analysis

Synthesize across sources

Structure insights + recommendations

Validate outputs with the accuracy, consistency, and relevance model

This replaced months of manual labor with a minutes-based workflow.
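The inputs and workflow steps above can be read as a chain of prompts, where each step's output feeds the next. The sketch below shows only that shape. The `Engine` callable, the `ResearchInput` fields, and the prompt wording are all hypothetical, since the real prompts and the internal AI platform's interface are not described here. Validation is left as a separate step after the chain.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical engine interface: takes a prompt string, returns generated text.
Engine = Callable[[str], str]


@dataclass
class ResearchInput:
    raw_brief: str  # raw research brief
    context: str    # context + known data
    scope: str      # planned scope of inquiry


def run_workflow(inp: ResearchInput, engine: Engine) -> dict[str, str]:
    """Chain the four generation steps; each output feeds the next prompt."""
    brief = engine(
        "Rewrite this research brief so it is specific and answerable.\n"
        f"Brief: {inp.raw_brief}\nContext: {inp.context}\nScope: {inp.scope}"
    )
    analysis = engine(f"Run a first-pass analysis for this brief:\n{brief}")
    synthesis = engine(f"Synthesize across internal and external sources:\n{analysis}")
    report = engine(f"Structure insights and recommendations:\n{synthesis}")
    # Every intermediate artifact is returned so it can be validated afterwards.
    return {
        "brief": brief,
        "analysis": analysis,
        "synthesis": synthesis,
        "report": report,
    }
```

Keeping the intermediate outputs, rather than only the final report, makes it possible to check where a weak brief or a thin analysis degraded the result.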

Adoption

The workflow spread through:

Internal team demos

Design camps

Design leadership syncs

Cross-team requests from product + business

Pilot support for squads during live projects

It’s now published on the Grab Design internal site as the standard research workflow.

Impact

Time, cost, quality, and org-level influence

Research cycles cut from 4–6 months to minutes.

Freed designers to focus on design, not research mechanics.

Teams avoided paying USD 40k–60k per vendor study.

Standardized evaluation through accuracy, consistency, and relevance.

Improved trust in research outputs.

Became the reference research workflow across teams, with multiple squads requesting to use it during pilots.

What This Demonstrates

Ability to ship high-leverage tools with minimal engineering support

Systems thinking

Product strategy under ambiguity

Evidence-based design

Prompt architecture

Workflow design

Cross-functional influence
